Face Detect V1.0(TrialFaceSDK)

Capability Introduction

Interface Capability

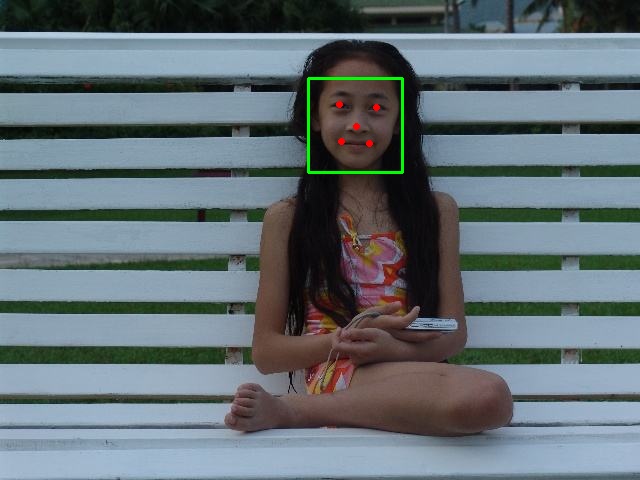

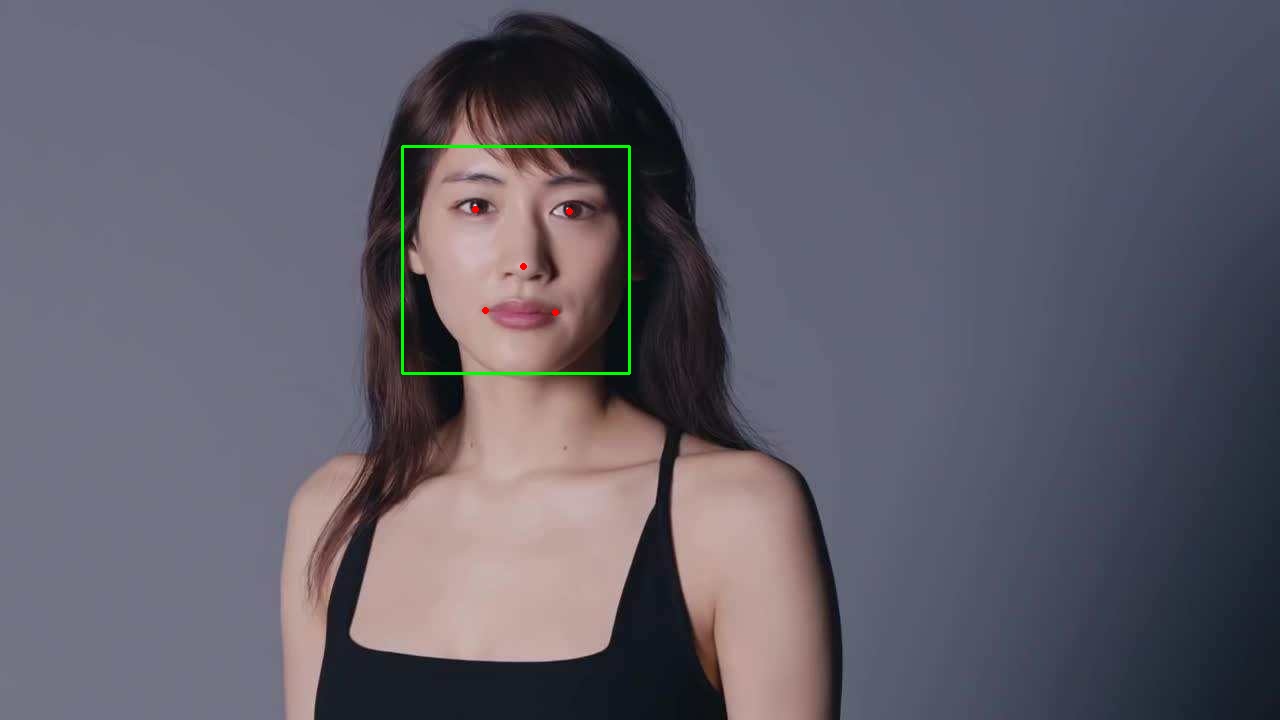

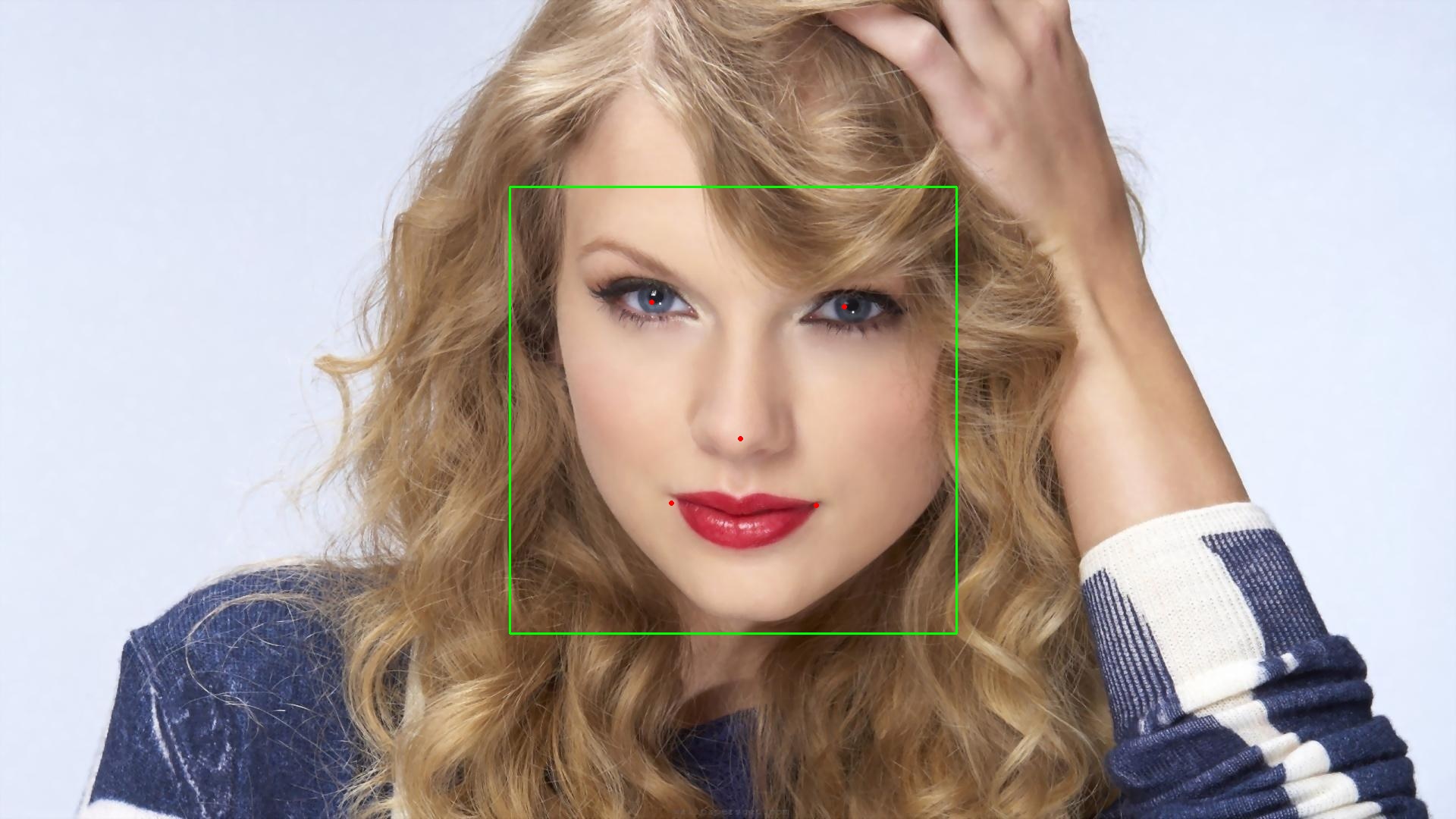

- Face Detect: Detects faces in images and computes, for each face, its confidence, angle, and the positions of key points (eyes, nose, mouth)

Dependencies

- CPU: MSVCP140, VCRUNTIME140

Invoke Method

Example Code 1st

    #include <vector>
    #include <opencv2/opencv.hpp>
    // Also include the TrialFaceSDK header that declares LonginusDetector
    // (the header name is not given in this document).

    using namespace glasssix;
    using namespace glasssix::longinus;

    int main()
    {
        // TrialFaceSDK does not support the GPU.
        // device < 0 runs on the CPU; otherwise the GPU numbered by 'device' is used.
        int device = -1;
        LonginusDetector detector;
        detector.set(FRONTALVIEW, device);

        cv::Mat img = cv::imread("../TestImage/2.png");
        if (img.empty())
            return 1; // the image could not be read

        // detect() expects a single-channel image; imread() returns BGR.
        cv::Mat gray;
        cv::cvtColor(img, gray, cv::COLOR_BGR2GRAY);

        std::vector<FaceRect> rects = detector.detect(gray.data, gray.cols, gray.rows, static_cast<int>(gray.step[0]), 24, 1.1f, 3, false, false);

        // Draw a green box around every detected face.
        for (size_t i = 0; i < rects.size(); i++)
            cv::rectangle(img, cv::Rect(rects[i].x, rects[i].y, rects[i].width, rects[i].height), cv::Scalar(0, 255, 0));

        cv::imshow("hehe", img);
        cv::waitKey(0);
        return 0;
    }

Class Description: LonginusDetector

Member Function

    void set(DetectionType detectionType, int device);

Capability: set the detection method and device

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| detectionType | enum DetectionType | FRONTALVIEW, FRONTALVIEW_REINFORCE, MULTIVIEW, MULTIVIEW_REINFORCE | detection quality and run time both increase in the order listed | |
| device | int | <0; >=0 | <0: use the CPU; >=0: use the GPU numbered by 'device' | |

Member Function

    void load(std::vector<std::string> cascades, int device = -1);

Capability: load classifier models

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| cascades | std::vector<std::string> | user input | each element of the vector represents one model file | model files are in XML format |
| device | int | <0; >=0 | <0: use the CPU; >=0: use the GPU numbered by 'device' | -1 by default |

Member Function

    std::vector<FaceRect> detect(unsigned char *gray, int width, int height, int step, int minSize, float scale, int min_neighbors, bool useMultiThreads = false, bool doEarlyReject = false);

Capability: detect and locate faces in a single-channel image

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| gray | unsigned char * | user input | address of the single-channel image data | image data should be stored contiguously |
| width | int | user input | width of the single-channel image | |
| height | int | user input | height of the single-channel image | |
| step | int | user input | number of bytes in each row of the single-channel image | |
| minSize | int | effective value >=24 | smallest detection window size | detected face areas are >= minSize |
| scale | float | >1 | ratio by which the image is expanded or shrunk | >1.1 |
| min_neighbors | int | >=0 | number of candidate boxes around a face area | 3 by default |
| useMultiThreads | bool | true; false | true: use multiple threads; false: use a single thread | takes effect only when using the CPU; false by default |
| doEarlyReject | bool | true; false | true: use early rejection; false: do not use it | detection is faster but less accurate; false by default |

- Return Value

std::vector<FaceRect>; each FaceRect contains the information of one detected face area
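The `gray`, `width`, `height`, and `step` parameters together describe a contiguous single-channel buffer. As a minimal sketch that does not depend on OpenCV, the following shows one way such a buffer could be built from interleaved 8-bit BGR pixels. `GrayImage` and `bgr_to_gray` are hypothetical helper names, not part of the SDK, and the luma weights are the common BT.601 ones.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical container for a tightly packed single-channel image.
struct GrayImage {
    std::vector<unsigned char> data; // row-major, no padding between rows
    int width = 0;
    int height = 0;
    int step = 0;                    // bytes per row; equals width when packed
};

// Convert interleaved 8-bit BGR pixels (3 bytes per pixel, no row padding)
// to grayscale with the BT.601 weights, using rounded integer arithmetic.
GrayImage bgr_to_gray(const unsigned char* bgr, int width, int height) {
    GrayImage out;
    out.width = width;
    out.height = height;
    out.step = width;
    out.data.resize(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            const int b = bgr[3 * i + 0];
            const int g = bgr[3 * i + 1];
            const int r = bgr[3 * i + 2];
            out.data[i] = static_cast<unsigned char>(
                (299 * r + 587 * g + 114 * b + 500) / 1000);
        }
    }
    return out;
}
```

With such a buffer, a call following the documented signature would look like `detector.detect(img.data.data(), img.width, img.height, img.step, 24, 1.1f, 3)`.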

Struct Description: FaceRect

| Member Variable | Type | Illustration | Remark |
|---|---|---|---|
| x | int | x coordinate of the top-left corner | |
| y | int | y coordinate of the top-left corner | |
| width | int | width of the face area | |
| height | int | height of the face area | |
| neighbors | int | number of candidate boxes around the face area | |
| confidence | double | likelihood that the area is a face | |
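The `confidence` and `neighbors` fields make it possible to post-filter detections. The sketch below keeps only the detections above a caller-chosen threshold and orders them from most to least confident. The struct is a stand-in that mirrors the FaceRect table above (real code would use the SDK's own type), `filter_by_confidence` is a hypothetical helper, and the value range of `confidence` is not documented here, so any threshold is an assumption to be tuned.

```cpp
#include <algorithm>
#include <vector>

namespace sketch {

// Stand-in with the fields listed in the FaceRect table.
struct FaceRect {
    int x, y, width, height;
    int neighbors;
    double confidence;
};

// Drop detections below min_confidence, then sort the remainder
// from most to least confident.
std::vector<FaceRect> filter_by_confidence(std::vector<FaceRect> rects,
                                           double min_confidence) {
    rects.erase(std::remove_if(rects.begin(), rects.end(),
                               [min_confidence](const FaceRect& r) {
                                   return r.confidence < min_confidence;
                               }),
                rects.end());
    std::sort(rects.begin(), rects.end(),
              [](const FaceRect& a, const FaceRect& b) {
                  return a.confidence > b.confidence;
              });
    return rects;
}

} // namespace sketch
```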


Member Function

    std::vector<FaceRectwithFaceInfo> detect(unsigned char *gray, int width, int height, int step, int minSize, float scale, int min_neighbors, int order = 0, bool useMultiThreads = false, bool doEarlyReject = false);

Capability: detect and locate faces in a single-channel image

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| gray | unsigned char * | user input | address of the single-channel image data | image data should be stored contiguously |
| width | int | user input | width of the single-channel image | |
| height | int | user input | height of the single-channel image | |
| step | int | user input | number of bytes in each row of the single-channel image | |
| minSize | int | effective value >=24 | smallest detection window size | detected face areas are >= minSize |
| scale | float | >1 | ratio by which the image is expanded or shrunk | >1.1 |
| min_neighbors | int | >=0 | number of candidate boxes around a face area | 3 by default |
| order | int | 0; otherwise | layout of the face image data array: NCHW or NHWC | order=0: NCHW; otherwise: NHWC; 0 by default |
| useMultiThreads | bool | true; false | true: use multiple threads; false: use a single thread | takes effect only when using the CPU; false by default |
| doEarlyReject | bool | true; false | true: use early rejection; false: do not use it | detection is faster but less accurate; false by default |

- Return Value

std::vector<FaceRectwithFaceInfo>; each FaceRectwithFaceInfo contains the detected face area, its key points, and its confidence
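The `order` parameter chooses between the NCHW and NHWC layouts. This document does not spell out which buffer the layout applies to, so the sketch below only illustrates how the two layouts differ when locating element (n, c, h, w) in a flat array. `offset_nchw` and `offset_nhwc` are hypothetical helper names.

```cpp
#include <cstddef>

// NCHW: for each image, all of channel 0 is stored first, then channel 1, ...
// C, H, W are the channel count, height, and width of every image.
std::size_t offset_nchw(int n, int c, int h, int w, int C, int H, int W) {
    return ((static_cast<std::size_t>(n) * C + c) * H + h) * W + w;
}

// NHWC: the channels of one pixel are stored next to each other.
std::size_t offset_nhwc(int n, int c, int h, int w, int C, int H, int W) {
    return ((static_cast<std::size_t>(n) * H + h) * W + w) * C + c;
}
```

For a 3-channel, 2 x 4 image, channel 1 of the first pixel sits at offset 8 in NCHW but at offset 1 in NHWC, which is why passing the wrong `order` would scramble the data.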

Member Function

    void extract_faceinfo(std::vector<FaceRectwithFaceInfo> face_info, std::vector<std::vector<int>>& bboxes, std::vector<std::vector<int>>& landmarks);

Capability: extract the face areas into bboxes and the key points into landmarks

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| face_info | std::vector<FaceRectwithFaceInfo> | | detected face information | |
| bboxes | std::vector<std::vector<int>> | | bboxes.size(): number of faces detected; bboxes[i][0]: x, bboxes[i][1]: y, bboxes[i][2]: width, bboxes[i][3]: height | |
| landmarks | std::vector<std::vector<int>> | | landmarks.size(): number of faces detected; landmarks[i][0]: x_lefteye, landmarks[i][1]: y_lefteye, landmarks[i][2]: x_righteye, landmarks[i][3]: y_righteye, landmarks[i][4]: x_nose, landmarks[i][5]: y_nose, landmarks[i][6]: x_leftmouth, landmarks[i][7]: y_leftmouth, landmarks[i][8]: x_rightmouth, landmarks[i][9]: y_rightmouth | |
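Each `landmarks[i]` entry is a flat list of ten integers in the order given above. A small sketch of unpacking one entry into named points follows; `Point`, `FaceLandmarks`, and `unpack_landmarks` are hypothetical names introduced for illustration and are not part of the SDK.

```cpp
#include <stdexcept>
#include <vector>

struct Point {
    int x;
    int y;
};

// Named view of the ten values stored in one landmarks[i] entry.
struct FaceLandmarks {
    Point left_eye;
    Point right_eye;
    Point nose;
    Point left_mouth;
    Point right_mouth;
};

// Unpack {x_lefteye, y_lefteye, x_righteye, y_righteye, x_nose, y_nose,
//         x_leftmouth, y_leftmouth, x_rightmouth, y_rightmouth}.
FaceLandmarks unpack_landmarks(const std::vector<int>& v) {
    if (v.size() < 10) {
        throw std::invalid_argument("expected 10 landmark values");
    }
    return FaceLandmarks{{v[0], v[1]}, {v[2], v[3]}, {v[4], v[5]},
                         {v[6], v[7]}, {v[8], v[9]}};
}
```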

Member Function

    void extract_biggest_faceinfo(std::vector<FaceRectwithFaceInfo> face_info, std::vector<std::vector<int>>& bboxes, std::vector<std::vector<int>>& landmarks);

Capability: extract the biggest face area into bboxes and the key points of that face area into landmarks

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| face_info | std::vector<FaceRectwithFaceInfo> | | detected face information | |
| bboxes | std::vector<std::vector<int>> | | bboxes.size(): number of faces detected; bboxes[i][0]: x, bboxes[i][1]: y, bboxes[i][2]: width, bboxes[i][3]: height | |
| landmarks | std::vector<std::vector<int>> | | landmarks.size(): number of faces detected; landmarks[i][0]: x_lefteye, landmarks[i][1]: y_lefteye, landmarks[i][2]: x_righteye, landmarks[i][3]: y_righteye, landmarks[i][4]: x_nose, landmarks[i][5]: y_nose, landmarks[i][6]: x_leftmouth, landmarks[i][7]: y_leftmouth, landmarks[i][8]: x_rightmouth, landmarks[i][9]: y_rightmouth | |
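How the SDK ranks faces by size is not stated here. Assuming "biggest" means the largest width * height, the selection could be sketched as follows on a `bboxes` vector in the layout described above. `index_of_biggest` is a hypothetical helper, not an SDK function.

```cpp
#include <cstddef>
#include <vector>

// Return the index of the box with the largest area, or -1 if there is none.
// Each box is {x, y, width, height}; entries with fewer than 4 values are skipped.
int index_of_biggest(const std::vector<std::vector<int>>& bboxes) {
    int best = -1;
    long long best_area = -1;
    for (std::size_t i = 0; i < bboxes.size(); ++i) {
        if (bboxes[i].size() < 4) {
            continue;
        }
        const long long area =
            static_cast<long long>(bboxes[i][2]) * bboxes[i][3];
        if (area > best_area) {
            best_area = area;
            best = static_cast<int>(i);
        }
    }
    return best;
}
```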

Member Function

    std::vector<unsigned char> alignFace(const unsigned char* ori_image, int n, int channels, int height, int width, std::vector<std::vector<int>> bbox, std::vector<std::vector<int> >landmarks);

Capability: align faces

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| ori_image | const unsigned char* | | single-channel image used for face detection | |
| n | int | | number of single-channel images | |
| channels | int | | number of channels of the image | |
| height | int | | height of the single-channel image | |
| width | int | | width of the single-channel image | |
| bbox | std::vector<std::vector<int>> | | bbox.size(): number of faces detected; bbox[i][0]: x, bbox[i][1]: y, bbox[i][2]: width, bbox[i][3]: height | |
| landmarks | std::vector<std::vector<int>> | | landmarks.size(): number of faces detected; landmarks[i][0]: x_lefteye, landmarks[i][1]: y_lefteye, landmarks[i][2]: x_righteye, landmarks[i][3]: y_righteye, landmarks[i][4]: x_nose, landmarks[i][5]: y_nose, landmarks[i][6]: x_leftmouth, landmarks[i][7]: y_leftmouth, landmarks[i][8]: x_rightmouth, landmarks[i][9]: y_rightmouth | |

- Return Value

std::vector<unsigned char>; the aligned face data is stored in the vector
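This document does not describe how alignFace computes the alignment. As general background only, landmark-based alignment commonly starts by estimating the in-plane rotation of the face from the line between the eyes. The sketch below computes that angle from one `landmarks[i]` entry; `eye_roll_degrees` is a hypothetical helper and should not be read as the SDK's method.

```cpp
#include <cmath>
#include <vector>

// Angle, in degrees, of the line from the left eye to the right eye.
// Uses lm[0..3] = x_lefteye, y_lefteye, x_righteye, y_righteye.
// 0 means the eyes are level; image y grows downward, so a positive
// value means the right-eye point is lower in the image.
// Precondition: lm.size() >= 4.
double eye_roll_degrees(const std::vector<int>& lm) {
    const double kPi = 3.14159265358979323846;
    const double dx = static_cast<double>(lm[2] - lm[0]);
    const double dy = static_cast<double>(lm[3] - lm[1]);
    return std::atan2(dy, dx) * 180.0 / kPi;
}
```

Rotating the image by the negative of this angle around the midpoint of the eyes would level the eye line, which is one typical alignment step.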

Face Match

Capability Introduction

Interface Capability

- Face Match: Takes the detection results of consecutive video frames as input and judges whether the faces belong to the same person. It takes effect only when the camera position is fixed.

Invoke Method

Example Code 3rd:

    #include <cstdio>
    #include <iostream>
    #include <vector>
    #include <opencv2/opencv.hpp>
    // Also include the TrialFaceSDK header that declares LonginusDetector
    // (the header name is not given in this document).

    using namespace glasssix;
    using namespace glasssix::longinus;

    int main(int argc, char** argv)
    {
        cv::Mat frame;
        cv::Mat gray;
        int frame_extract_frequency = 5; // process one frame out of every 5

        cv::VideoCapture capture("..\\data\\group.mp4");
        if (!capture.isOpened())
        {
            std::cout << "Reading Video Failed !" << std::endl;
            getchar();
            return 1;
        }
        int frame_num = static_cast<int>(capture.get(cv::CAP_PROP_FRAME_COUNT));

        int device = -1; // CPU
        LonginusDetector detector;
        detector.set(MULTIVIEW_REINFORCE, device);

        // loop through every frame in the video
        for (int i = 0; i < frame_num - 1; i++)
        {
            capture >> frame;
            if (frame.empty())
                break; // the stream ended before the reported frame count
            if (i % frame_extract_frequency != 0)
                continue; // only every frame_extract_frequency-th frame is processed and shown

            // VideoCapture delivers BGR frames; detect() expects a single-channel image.
            cv::cvtColor(frame, gray, cv::COLOR_BGR2GRAY);
            std::vector<FaceRect> rects = detector.detect(gray.data, gray.cols, gray.rows, static_cast<int>(gray.step[0]), 24, 1.1f, 3, false, false);

            // match() also accepts std::vector<FaceRectwithFaceInfo> if the other detect() overload is used.
            auto results = detector.match(rects, frame_extract_frequency);

            // draw each face rect and its id string on the frame
            for (size_t j = 0; j < results.size(); j++)
            {
                cv::Rect temp_(results[j].rect.x, results[j].rect.y, results[j].rect.width, results[j].rect.height);
                cv::rectangle(frame, temp_, cv::Scalar(0, 0, 255));
                cv::putText(frame, results[j].id, temp_.tl(), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255, 255, 255), 1, cv::LINE_AA);
            }

            cv::imshow("test", frame);
            cv::waitKey(5);
        }
        return 0;
    }

Class Description: Matcher

Member Function

    std::vector<Match_Retval> match(std::vector<FaceRect> &faceRect, const int frame_extract_frequency);

Capability: assign a uuid to each input face area and judge whether the face is new in the video frames.

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| faceRect | std::vector<FaceRect> | – | obtained from LonginusDetector.detect() | |
| frame_extract_frequency | int | positive integer | interval between two processed frames | |

- Return Value

std::vector<Match_Retval>; each Match_Retval contains the detected face area information and its uuid

Member Function

    std::vector<Match_Retval> match(std::vector<FaceRectwithFaceInfo> &faceRect, const int frame_extract_frequency);

Capability: assign a uuid to each input face area and judge whether the face is new in the video frames.

| Parameter | Parameter Type | Value | Illustration | Remark |
|---|---|---|---|---|
| faceRect | std::vector<FaceRectwithFaceInfo> | – | obtained from LonginusDetector.detect() | |
| frame_extract_frequency | int | positive integer | interval between two processed frames | |

- Return Value

std::vector<Match_Retval>; each Match_Retval contains the detected face area information and its uuid

Struct Description: Match_Retval

| Member Variable | Type | Illustration | Remark |
|---|---|---|---|
| rect | FaceRect | face area | |
| id | std::string | 32bit uuid | |
| is_new | bool | flag indicating whether this face area belongs to a new person | |
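How match() decides that two face areas belong to the same person is not documented here. With a fixed camera, one common way to associate detections across frames is by rectangle overlap (intersection over union). The sketch below illustrates that idea with stand-in types that resemble Match_Retval; `OverlapMatcher`, `iou`, the `min_iou` threshold, and the counter-based ids are all assumptions for illustration, not the SDK's algorithm or its uuid format.

```cpp
#include <algorithm>
#include <string>
#include <vector>

namespace sketch {

struct Rect { int x, y, width, height; };

// Stand-in resembling Match_Retval: area, id, and a "first seen" flag.
struct Tracked {
    Rect rect;
    std::string id;
    bool is_new;
};

// Intersection over union of two axis-aligned rectangles, in [0, 1].
double iou(const Rect& a, const Rect& b) {
    const int x1 = std::max(a.x, b.x);
    const int y1 = std::max(a.y, b.y);
    const int x2 = std::min(a.x + a.width, b.x + b.width);
    const int y2 = std::min(a.y + a.height, b.y + b.height);
    const double inter =
        static_cast<double>(std::max(0, x2 - x1)) * std::max(0, y2 - y1);
    const double uni = static_cast<double>(a.width) * a.height +
                       static_cast<double>(b.width) * b.height - inter;
    return uni > 0.0 ? inter / uni : 0.0;
}

// Reuses the id of the best-overlapping face from the previous call,
// or issues a fresh id when no overlap reaches min_iou.
// Simplification: two current faces may pick the same previous face.
class OverlapMatcher {
public:
    explicit OverlapMatcher(double min_iou) : min_iou_(min_iou) {}

    std::vector<Tracked> match(const std::vector<Rect>& rects) {
        std::vector<Tracked> current;
        for (const Rect& r : rects) {
            const Tracked* best = nullptr;
            double best_iou = min_iou_;
            for (const Tracked& p : previous_) {
                const double v = iou(r, p.rect);
                if (v >= best_iou) {
                    best_iou = v;
                    best = &p;
                }
            }
            if (best != nullptr) {
                current.push_back(Tracked{r, best->id, false});
            } else {
                current.push_back(
                    Tracked{r, "face-" + std::to_string(next_id_++), true});
            }
        }
        previous_ = current;
        return current;
    }

private:
    double min_iou_;
    int next_id_ = 0;
    std::vector<Tracked> previous_;
};

} // namespace sketch
```

Overlap-based association degrades when the camera moves or when many frames are skipped between calls, which is consistent with the fixed-camera condition stated for Face Match.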


Longinus Performance

Three face images (640 * 480, 1280 * 720, and 1920 * 1080) were tested on an i7-8700K platform: detection was run 1000 times on each image and the average detection time was calculated.

Basic Information of Test Platform

| Item | Description |
|---|---|
| Operating System | Windows 10 Enterprise 64-bit |
| Processor | Intel(R) Core(TM) i7-8700K CPU @ 3.70GHz |
| Cores and Threads | 6 Cores and 12 Threads |
| RAM | 32GB |
| Compiler | MSVC 19.5 |
| OpenMP | Yes |
| SIMD Instruction Set | AVX2 |

Test Report

Parameters: minSize=48, scale=1.2, min_neighbors=3, useMultiThreads=false, doEarlyReject=false

Detection Time (ms):

| Image_Size | FRONTALVIEW | FRONTALVIEW_REINFORCE | MULTIVIEW | MULTIVIEW_REINFORCE |
|---|---|---|---|---|
| 640 * 480 | 11.72 | 25.72 | 34.06 | 41.42 |
| 1280 * 720 | 26.14 | 45.44 | 69.09 | 85.07 |
| 1920 * 1080 | 76.59 | 122.38 | 155.89 | 186.86 |

Declaration: The test face images come from the Internet, and all rights belong to the original authors. If we have infringed your legal rights, please contact us and we will remove the images. Thanks!